This is the second part in a series of posts about the networking architecture for Dragon Saddle Melee.

Basic Architecture

The core networking architecture in Dragon Saddle Melee is straightforward. Each new frame state, $S_{n+1}$, is a function of the previous frame state, $S_n$, and the current set of inputs, $C_{n+1}$ (we’ll refer to them generally as commands).

$$S_{n+1} = f(S_n, C_{n+1})$$

$f(\cdot)$ is our gameplay logic that generates the new state from the old state and the new commands. It’s critical that this code depend only on those two pieces of information. That lets us go back and re-simulate any older frame just by keeping a buffer of old states and old commands.
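A minimal Python sketch of that idea, assuming a toy one-dimensional state (a single dragon’s height and vertical velocity) and a command with only a flap button. `State`, `Command`, `f`, `resimulate`, and the gravity and flap constants are all hypothetical, not DSM’s actual code. Because `f` reads nothing but its two arguments, replaying a buffered state with the same buffered commands reproduces the same frames.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class State:
    # Hypothetical toy state: one dragon's height and vertical velocity.
    y: float
    vy: float

@dataclass(frozen=True)
class Command:
    flap: bool

GRAVITY = -1.0      # hypothetical per-tick change in velocity
FLAP_IMPULSE = 3.0  # hypothetical per-tick boost while flapping

def f(prev: State, cmd: Command) -> State:
    """S_{n+1} = f(S_n, C_{n+1}): depends on nothing but its two arguments."""
    vy = prev.vy + GRAVITY + (FLAP_IMPULSE if cmd.flap else 0.0)
    return State(y=prev.y + vy, vy=vy)

def resimulate(start: State, commands: List[Command]) -> List[State]:
    """Re-run the simulation from a buffered state using buffered commands."""
    states = [start]
    for cmd in commands:
        states.append(f(states[-1], cmd))
    return states

s0 = State(y=10.0, vy=0.0)
cmds = [Command(flap=True), Command(flap=False), Command(flap=False)]
assert resimulate(s0, cmds) == resimulate(s0, cmds)  # same inputs, same frames
```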

The state, commands, and gameplay logic are, of course, all game-specific. In DSM they generally break down as follows:

State contains the position and velocity of all the objects (dragons, projectiles, loot, etc.) and other gameplay state (various timers and flags).

Commands are the inputs a player can give (joystick position, flap, use a weapon). For example, a command could be joystick position (0, 0) with no buttons pressed, or joystick position (-1, +1) with the flap button pressed. In DSM, objects other than the dragons don’t actually have commands, because their behavior isn’t directly influenced by player or AI inputs. For example, once a laser beam is fired, neither the player nor the AI controls it. It simply flies in the direction it was shot until it hits something or times out.
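As an illustration, the commands described above and a command-free projectile might be modeled as below. The field names, the `ticks_left` lifetime counter, and `step_laser` are hypothetical, and collision handling is left out; the point is only that a laser’s update takes no command at all.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Command:
    # Joystick axes, each in {-1, 0, +1}, plus button states.
    stick: Tuple[int, int] = (0, 0)
    flap: bool = False
    fire: bool = False

@dataclass(frozen=True)
class Laser:
    pos: Tuple[float, float]
    vel: Tuple[float, float]
    ticks_left: int  # hypothetical: ticks remaining before the laser expires

def step_laser(laser: Laser) -> Optional[Laser]:
    """Advance a laser one tick. There is no Command argument: once fired, a
    laser flies straight until it times out (collision checks omitted here)."""
    if laser.ticks_left <= 0:
        return None  # timed out; remove it from the world
    x, y = laser.pos
    vx, vy = laser.vel
    return Laser((x + vx, y + vy), laser.vel, laser.ticks_left - 1)

# The two example commands from the text.
idle = Command()
flap_up_left = Command(stick=(-1, 1), flap=True)
```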

Gameplay Logic in DSM is a Box2D physics engine wrapped in logic that applies the appropriate forces and impulses to the objects. Box2D isn’t deterministic, which adds some complications. More on that later.

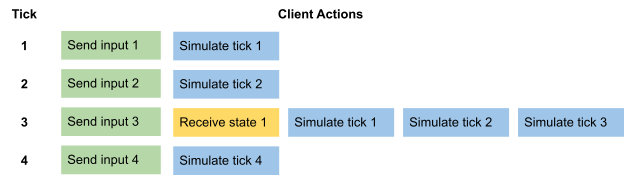

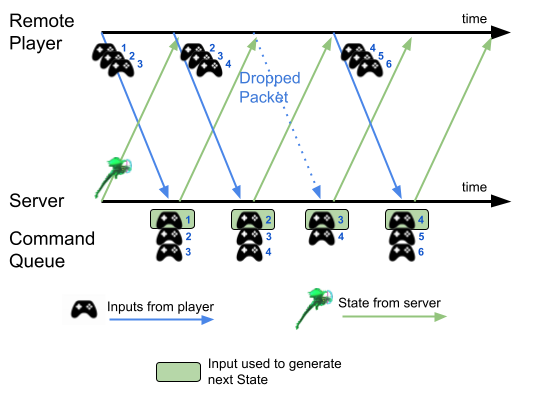

This leads to a basic networking architecture where each client streams its local player’s commands to the server. The server chugs along, simulating each new frame from the previous state and the commands it has received from each client. As it simulates new frames, it sends the new states and associated commands to each client. By the time a client receives these definitive states and commands from the server, they are old: they refer to a frame in the past (i.e., a frame the client has already simulated). So the client rewinds its simulation (i.e., “rolls back”) to that old frame, plugs in the definitive state and commands, and re-simulates from that old frame all the way up to its current frame. This propagates the definitive information the server sent about that old frame forward to the most recent frame the client has simulated, which is also the frame visible on screen.
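Here is one way the client side of that roll-back loop could look, as a Python sketch. It uses a toy one-dimensional state, keeps per-frame buffers in dictionaries, and assumes the server’s frames always arrive behind the client’s current frame, as described above. `RollbackClient`, `simulate_next`, and `on_server_frame` are hypothetical names; a real client would also prune old buffer entries.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class State:
    y: float   # hypothetical: one dragon's height
    vy: float  # and vertical velocity

@dataclass(frozen=True)
class Command:
    flap: bool

def f(prev: State, cmd: Command) -> State:
    """Toy gameplay logic: gravity plus an upward boost while flapping."""
    vy = prev.vy - 1.0 + (3.0 if cmd.flap else 0.0)
    return State(prev.y + vy, vy)

class RollbackClient:
    def __init__(self, initial: State) -> None:
        self.frame = 0
        self.states: Dict[int, State] = {0: initial}
        self.commands: Dict[int, Command] = {}

    def simulate_next(self, cmd: Command) -> State:
        """Advance the local simulation by one tick."""
        self.frame += 1
        self.commands[self.frame] = cmd
        self.states[self.frame] = f(self.states[self.frame - 1], cmd)
        return self.states[self.frame]

    def on_server_frame(self, frame: int, state: State, cmd: Command) -> State:
        """Roll back to an old frame, plug in the server's definitive data,
        then re-simulate forward to the client's current frame."""
        assert frame <= self.frame, "server frames are expected to lag the client"
        self.states[frame] = state
        self.commands[frame] = cmd
        for n in range(frame + 1, self.frame + 1):
            self.states[n] = f(self.states[n - 1], self.commands[n])
        return self.states[self.frame]
```

In this sketch the client only overwrites the one frame the server confirmed; the stored commands for later frames are replayed as they are.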

When we talk about a frame, we’re referring to a frame in the simulation, not necessarily a rendered frame on the screen. The DSM simulation runs at a constant tick rate (usually set to 20 ticks per second, i.e., 50ms per tick). The visuals are rendered much more often. More on that later.
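One common way to decouple a fixed 20-tick-per-second simulation from a faster render loop is a time accumulator, sketched below in Python. This is a standard fixed-timestep pattern, not necessarily what DSM does, and `FixedStepClock` and `ticks_for_frame` are hypothetical names. Each rendered frame reports how much wall-clock time has passed, and the clock answers how many whole simulation ticks to run before drawing.

```python
TICK_RATE = 20                   # simulation ticks per second, as in the text
TICK_DURATION = 1.0 / TICK_RATE  # 0.05 s (50 ms) per tick

class FixedStepClock:
    """Converts variable render-frame durations into whole simulation ticks."""

    def __init__(self) -> None:
        self.accumulator = 0.0  # elapsed time not yet consumed by a tick

    def ticks_for_frame(self, frame_dt: float) -> int:
        self.accumulator += frame_dt
        ticks = 0
        while self.accumulator >= TICK_DURATION:
            self.accumulator -= TICK_DURATION
            ticks += 1
        return ticks
```

At roughly 60 rendered frames per second, most render frames run zero ticks and about every third one runs a single tick; leftover time carries over in the accumulator instead of being lost.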

Getting back to the priorities we defined earlier, how do we minimize hiccups in our own player’s movement? Hiccups occur when the state and command the local client uses to simulate a frame don’t match the state and command the server uses to simulate the same frame. The server’s simulation is constantly moving forward; it never rolls back. Once it simulates a frame, that frame’s state and associated commands are definitive and will never change. So what happens when a player’s command hasn’t arrived by the time the server needs to simulate the next frame? The server has to make an educated guess. In DSM, it simply reuses the previous command. At that point there’s a mismatch between what the player thinks he did that frame and what the server thinks he did. When the definitive state and command reach that client, the client will roll back and correct itself, which can cause a hiccup.
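A minimal Python sketch of that fallback, assuming the server records each player’s received commands by frame number and starts from a neutral command. `PlayerInput` and `command_for_frame` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class Command:
    stick: Tuple[int, int] = (0, 0)
    flap: bool = False

class PlayerInput:
    """Server-side record of one player's commands, keyed by frame number."""

    def __init__(self) -> None:
        self.arrived: Dict[int, Command] = {}
        self.previous = Command()  # neutral command before anything arrives

    def command_for_frame(self, frame: int) -> Command:
        # The server never waits: if the real command is missing, it guesses
        # by reusing the last command it simulated for this player.
        cmd = self.arrived.get(frame, self.previous)
        self.previous = cmd
        return cmd
```

Because the server never rolls back, a command that shows up after its frame has been simulated is simply never used; the client’s roll-back is what repairs the difference.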

DSM mitigates this problem by having the server keep a short queue of commands for each object. The length of this queue is based on network conditions. When the server needs to simulate the next frame, it plucks the next command out of each client’s command queue. When clients send their latest frame’s command to the server, they also resend several previous commands, in case the server never received those earlier packets. Buffering a few commands on the server and redundantly transmitting previous commands both reduce the likelihood that the server and client will simulate a frame with different commands for the object under the client’s control.
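Both halves of that mitigation could be sketched in Python as below. The client packs its newest command together with a few earlier ones. The server drops duplicates into a per-player buffer and drains one command per tick, falling back to the previous command if the buffer runs dry. The redundancy count of 3, the sequence-number scheme, and all names are assumptions for illustration, and the network-dependent queue length is not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Command:
    flap: bool = False

REDUNDANCY = 3  # hypothetical: each packet also repeats the 3 previous commands

Packet = List[Tuple[int, Command]]

def build_packet(history: List[Command], newest_seq: int) -> Packet:
    """Client side: newest command plus up to REDUNDANCY earlier ones, each
    tagged with its sequence number."""
    first = max(0, newest_seq - REDUNDANCY)
    return [(seq, history[seq]) for seq in range(first, newest_seq + 1)]

class CommandQueue:
    """Server side: buffers one player's commands and releases one per tick."""

    def __init__(self) -> None:
        self.pending: Dict[int, Command] = {}
        self.next_seq = 0          # sequence number the next tick will consume
        self.previous = Command()  # fallback guess when the buffer runs dry

    def receive(self, packet: Packet) -> None:
        for seq, cmd in packet:
            # Redundant copies and commands whose slot was already consumed
            # are ignored; only not-yet-used sequence numbers are stored.
            if seq >= self.next_seq:
                self.pending.setdefault(seq, cmd)

    def pop_for_tick(self) -> Command:
        cmd = self.pending.pop(self.next_seq, self.previous)
        self.previous = cmd
        self.next_seq += 1
        return cmd
```

If the packet carrying command 0 is lost, the packet for command 1 still delivers it, so the server simulates that frame with the player’s real input rather than a guess.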

The downside to this strategy is that we’re deliberately adding latency to the client’s commands. The server won’t actually use a client’s command until it has worked its way to the front of the command queue for that player’s object. For example, with a command queue length of 3 frames, each representing 50ms of simulation time, the server effectively puts a 150ms delay on that player’s input. On top of that, the player won’t receive the definitive state resulting from that command until an additional amount of time, equal to his network latency to the server, has passed.

So a given client ends up with an effective latency of:

$$\text{Effective Latency} = \text{Command Queue Length} \times \text{Tick Duration} + \text{Network Latency}$$
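The formula is easy to check by hand. As a small Python function, using the text’s numbers (3 queued frames at 50ms each) plus a purely hypothetical 80ms of network latency:

```python
def effective_latency_ms(queue_length: int, tick_ms: float, network_latency_ms: float) -> float:
    """Command Queue Length x Tick Duration + Network Latency, in milliseconds."""
    return queue_length * tick_ms + network_latency_ms

print(effective_latency_ms(3, 50.0, 80.0))  # 230.0
```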

However, this isn’t nearly as bad as it seems. The client doesn’t need to impose the queue-induced latency on its local simulation. When it generates a command for a frame, its simulation can use that command immediately. So if the player hits the flap button to make his dragon move up, his local simulation processes that right away. This is safe because the command queue makes it very unlikely that the server will send back a definitive command for that frame that differs from the one the client used locally.

The extra latency does affect the client when it comes to the behavior of other objects. Commands for objects controlled by other, remote clients suffer the full command queue latency plus network latency. The local simulation tries to guess those commands (by replaying previous commands). The server also sends each client the full command queue for all the other objects; for low-latency players, that queue may be long enough that the client always knows another object’s command in advance. We can also visually gloss over mispredictions when we render things (more on that later). In the end, this isn’t terribly noticeable.
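A Python sketch of how a client might pick each dragon’s command for a frame it is about to simulate. Its own input is used immediately. Every other dragon gets the server-sent command for that frame if one has arrived, and otherwise the most recent earlier command the client knows about. The data layout (a per-object dictionary keyed by frame number) and the function names are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class Command:
    stick: Tuple[int, int] = (0, 0)
    flap: bool = False

def predict(by_frame: Dict[int, Command], frame: int) -> Command:
    """Server-sent command for `frame` if known, else repeat the latest earlier one."""
    if frame in by_frame:
        return by_frame[frame]
    earlier = [n for n in by_frame if n < frame]
    return by_frame[max(earlier)] if earlier else Command()

def commands_for_frame(
    frame: int,
    local_id: str,
    local_cmd: Command,
    remote: Dict[str, Dict[int, Command]],
) -> Dict[str, Command]:
    """Commands every dragon uses for `frame` in the client's local simulation."""
    chosen = {local_id: local_cmd}  # own input: no command-queue delay locally
    for obj_id, by_frame in remote.items():
        chosen[obj_id] = predict(by_frame, frame)
    return chosen
```

The longer the queue the server forwards for an object, the more often `predict` finds a real command instead of falling back to a guess.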

In DSM, we can also cheat in another way to hide this latency. In the game, dragons are either controlled by a remote player or are NPC dragons controlled by the server. For the NPC dragons, the server uses a command queue that spans more time than a typical client’s command queue latency plus network latency, and it sends this full NPC command queue down to each client. As long as the NPC command queue exceeds the command queue latency plus network latency for that local player, the player’s local simulation will already have a definitive command for every NPC object. The only objects suffering the effects of command queue latency will be other players. And because the server also sends the current command queue for each remote player down to the client, that could shave a couple of frames of latency off those objects, too.
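Taking that condition literally, the smallest NPC queue length (in ticks) whose duration strictly exceeds a given player’s command queue latency plus network latency can be computed as below. The function name and the 80ms network latency in the example are hypothetical. Sizing the queue for the slowest connected player is likewise an assumption, not something the text specifies.

```python
import math

def min_npc_queue_length(client_queue_len: int, tick_ms: float, network_latency_ms: float) -> int:
    """Fewest NPC queue ticks whose duration is strictly greater than the
    player's command queue latency plus network latency."""
    threshold_ms = client_queue_len * tick_ms + network_latency_ms
    return math.floor(threshold_ms / tick_ms) + 1

print(min_npc_queue_length(3, 50.0, 80.0))  # 5 ticks = 250ms, which exceeds 230ms
```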

Next Up

That’s it for the second post in the series. In the next post I’ll talk about some gotchas I ran into while implementing this networking architecture, along with their workarounds.

Chris

Developer of Dragon Saddle Melee

Co-Founder of Main Tank Software

February 24th, 2022